Processes, Implementation, Signals & Threads

by Vincent Van, Angie Yen, and Iverin Hui

Process vs. Thread Descriptors

| Descriptor Characteristics | New Process | New Thread |
| --- | --- | --- |
| pid | D | S |
| ppid | D | S |
| accounting* | C | S/C |
| name*, priority* | C | C |
| memory state | C | S |
| stack* | C | D |
| register* | C | D |
| state* | C | D |
| event handler | C | S |
| file table | C | S |
| file structure | S | S |

D = different, S = same / shared, C = copied

* = included in Thread Descriptor

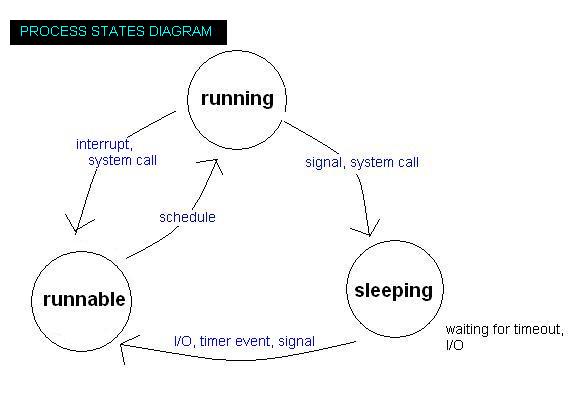

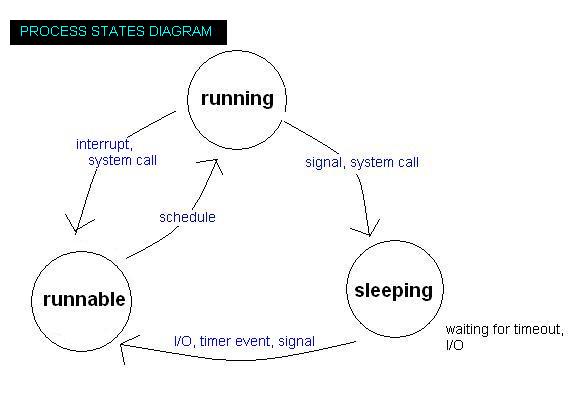

Process Implementation

How does the OS multiplex the machine among application processes?

---> "hardware process" (book lingo)

"Most" of the time, the CPU is running application code. The OS gets control in two ways:

- System calls give the OS control, via trap instructions (interrupt-like)

- Interrupts give the OS control; the timer interrupt goes off periodically

What happens once the OS gets control:

1. Hardware saves register state on a stack

2. OS moves register state into the process descriptor

3. OS code executes

4. OS decides next process to run

5. Restore memory state

6. Restore the registers

7. Process runs

Signals & Asynchronous Events

Signal: notifies a process of an asynchronous event

Asynchronous: an event that happens independently of the process's code

Examples: killing an uncooperative process (stuck in an infinite loop, or sleeping indefinitely)

How should we implement signals?

With files? *NO*

But let's try...

Every process has a file called /proc/pid/signal

Process periodically checks this file for data

If the file is nonempty, exit()

Problem with this implementation:

==> NOT ROBUST

If the process goes into an infinite loop, it can't check the file. This design puts the process in charge of checking for signals: BAD.

We're looking for a way to kill a process that's out of control, so we can't use anything the process is in charge of.

Signal syscalls

int sigaction(int sig, struct sigaction *sa, ...)

==> this function sets up a handler for a signal in the current process

The struct sigaction *sa:

    struct sigaction
    {
        void (*sa_handler)(int);  // function in your code that should be called when the signal happens
    };

Signal Keywords:

sig =

SIGKILL - kill process

SIGSTOP - make process sleep (suspend it)

SIGSEGV - dereferenced a NULL pointer or other memory error ("segmentation violation")

SIGHUP - hangup (the terminal the process was writing its output to was closed)

SIGCHLD - a child process exited (default is ignored)

int kill(pid_t pid, int sig)

==> sends signal sig to process pid

Why use a signal for a SEGV?

The hardware knows an error occurred, but the software does not. The signal is how the process finds out.

Want to clear up zombie processes?

A. Wait for all children

B. OR design a SIGCHLD handler

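An example handler:

    #include <signal.h>
    #include <string.h>
    #include <sys/wait.h>

    void handle_child(int sig)
    {
        (void) sig;
        /* Reap every child that has already exited; WNOHANG keeps the handler from blocking. */
        while (waitpid(-1, NULL, WNOHANG) > 0)
            ;
    }

In a handler: NO malloc, NO writes to the program's data structures (the handler can interrupt the main code at any point, so it must not change the memory state that code depends on).

    int main(void)
    {
        struct sigaction sa;
        memset(&sa, 0, sizeof sa);      /* clear the fields we do not set */
        sa.sa_handler = handle_child;
        sigemptyset(&sa.sa_mask);
        sigaction(SIGCHLD, &sa, NULL);  /* NULL: the old action is not needed */
        /* ... rest of the program: fork children, do work ... */
        return 0;
    }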

Suppose that process A calls kill(p, SIGHUP) and, at nearly the same time, process B also calls kill(p, SIGHUP). What happens?

We need a struct sigaction to guarantee delivery (even if the 2 signals are very close in time).

Old way: race condition; unreliable; success depended on how close in time the signals were.

Threads

Processes are isolated (self-contained)

Threads are like non-isolated processes

Threads decouple processing (execution) from state (memory/files)

Multiple virtual processors in the same program & memory space simultaneously

Processes: independent memory state and run state

Threads: share memory state

Advantages

+ share common data -> less copying overhead

+ share memory (easier communication, work on shared data structures)

+ take advantage of multiple CPUs' power

Disadvantages

- synchronization (multiple execution engines changing the same memory state simultaneously)

- race conditions!

- locking, which makes programming instantly harder

Generally, threads are used...

to deal with lots of data

because I/O is so slow! (overlap I/O waits with other work)