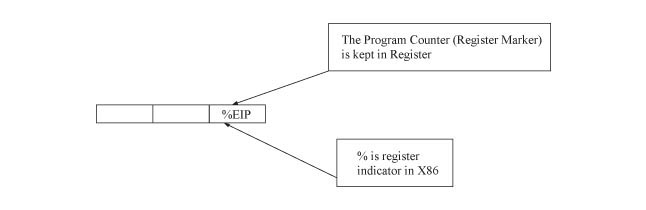

CS 111Scribe Notes for 4/11/05by Marina Cholakyan, Hyduke Noshadi, Sepehr Sahba and Young ChaProcessesWhat is a process?A process is a running instance of a program. The Web browser you're using to read these notes, for example, is a program: a sequence of machine instructions. The program becomes a process when it starts running, and develops some execution state. A computer can have multiple processes running the same program simultaneously. A process has a program counter (or, equivalently, instruction pointer) specifying the next instruction to execute, and a set of other resources too:

In our first lecture, we took a look at a simple C program that accomplished two tasks -- counting to five, and printing "Hello". Looking at this program as an "operating system" running two "processes", we can find several of these process features. (The "kernel" is in black in this figure; the two processes in orange and purple.)

int main (int argc, char *argv[])

{

int state = 0; // Kernel state: Current Process

int x = 0; // 1st process Global Variable

int printed = 0; // 2nd process Global Variable

while (1) {

if (state % 2 == 0) {

if (x < 5) // 1st process code

x++;

} else {

if (!printed) { // 2nd process code

printf("Hello");

printed = 1;

}

}

state++; // Round-robin "process scheduler"

}

}

We can also compare a process with a Turing Machine.

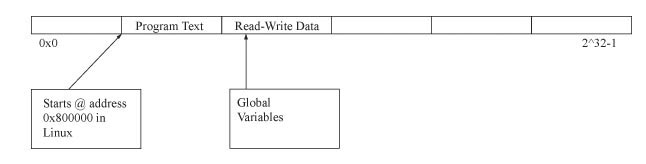

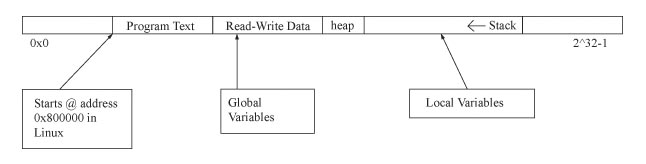

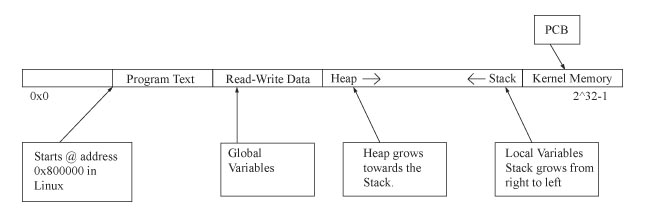

Memory Layout of the Process in x86 Registers Stack (LastInFirstOut)We need to keep local variables that are used during the running program somewhere in memory. Where can we keep Local Variables in memory? To place Local Variables where Global Variables are kept is a bad idea. Here is why. Thought Experiment: Let's treat local variables like global variables. That is, every function will reserve some space in the global variable area (Read-Write Data) for its local variables. What's wrong? Recursion: Functions that can call themselves! When a function calls itself (see below for an example), the function is effectively running twice, and each copy needs its own local variables. Thus, we need to allocate the function space in the different location. All modern machines do this with a stack.  The stack is an area of memory reserved for function arguments

and local variables. The stack is allocated a function at a time: when we

call a function, we push more space onto the stack to hold that

function's local variables; and when the function returns, we pop

its local variable space off the stack. The architecture has a special

register, called the stack pointer ( Let's take a look at a simple recursive function -- namely, Factorial -- and its pseudo-assembly code.

When the function is called:

These steps are repeated for reach function call.  Why do stacks grow downwards? Because it is much more natural and

convenient to refer to arguments and local variables with positive

offsets, such as The stack assumes that function never returns more than once. It is a feature that almost every programming language has. (There are functions in Scheme that could return more than once.) The reason can be described as the following: When a function returns all the local variables and also the state that the function was in it will be deleted. Therefore that functions state and variables will not exist any more. Heap (Dynamic Memory Allocation)Memory Layout (4 GB)  Let's introduce another section of memory which is called heap. In

the heap we store dynamically allocated memories. Dynamic memory is

being used to limit the process resource usage dynamically based on

availability. We use the following commands to allocate and free memory.

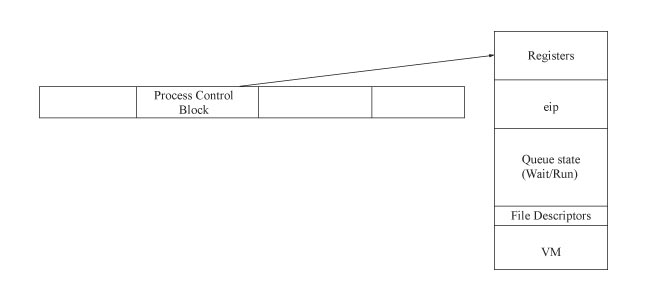

In edition we also have to store the register state and the program counter. The main reason is that we must be able to run more than one process at a time. Let's introduce the Process Control Block. It is located in the kernel memory and has the following structure Kernel Memory (Process Control Block)  Scheduling and ProcessesWhat is a scheduler? RUN QUEUE: Set of processes that are ready to go. "Ready to go" status does not mean that the process is 'useful'.

We generally want a small run queue (minimal amount of programs on it). WAIT QUEUE: A queue holding processes that are waiting for some event to happen. When the event happens, the process will be moved to the run queue so it can run. There are many wait queues, one per event that a process can wait for. For instance, we can have a wait queue for the following: 1) Other processes to finish 2) Input devices 3) Output devices 4) Inter-process communication 5) Timers, and so forth. Goal of RUN QUEUE and WAIT QUEUE: Blocking vs. Non-Blocking (System Calls)Blocking: doesn't return until finished (forces process to wait until done). A blocking system call might put a process on a wait queue, if the operation can't complete right away. Non-blocking: returns an error, such as EAGAIN, if the system can't complete the system call right away. This doesn't force the process to wait. Non-blocking system calls never cause a process to be put on a wait queue. This lets the process itself decide how to react if an operation can't complete right away. For instance, the process might go ahead and try to do something else. Input DevicesExamples of Input Devices: disk, keyboard, joystick... CLOCK: interrupt processor N times a second, kernel takes control. Timer Wait QueueThere are many ways to implement run queues and wait queues, from simple linked lists to complicated ring data structures and so forth. To get a feeling, let's take a look at how a timer wait queue might be implemented. A process goes onto the timer wait queue to wait until a certain time -- maybe 1 second from now, maybe 20 hours from now. Different processes on the timer wait queue are waiting for different times. How might we implement this?

Context SwitchSwitching the CPU to another process requires saving the state of the old process and loading the saved state of the new process. When a context switch occurs, the Kernel saves the context of the old process in its Process Control Block and loads the saved context of the new process scheduled to run. Context Switch time is pure overhead, because the system does no useful work while switching. Its speed varies from machine to machine, depending on the memory speed, the number of registers that must be copied, and the existence of any special instructions (such as a single instruction to load or store all the registers). Typical speeds are less than 10 milliseconds, but tens or hundreds of times more expensive than simple function calls. Requirements:

Creating ProcessesFork System Call

A process may be create several new processes, via a create-process system

call, during the course of execution. The creating process is called a

Parent process, and new processes are called the Children of that process.

Each of these new processes may in turn create other processes forming a

tree of processes. In general, a process will need certain resources (CPU

time, memory, files, I/O devices) to accomplish its task. When a process

creates a subprocess, that subprocess may be able to obtain its resources

directly from the operating system, or it may be constrained to a subset of

resources of the parent process.

How To Use Fork? pid_t p = fork();

if (p < 0)

printf("Error\n");

else if (p == 0)

printf("Child\n");

else /* p > 0 */

printf("Parent of %d\n", p);

One interesting way to think about Wait System CallA parent process can waits for a its child process to finish or exit. waitpid (pid_t pid,int *status,..........);pid =>the process id of the child that the parent is waiting for. status => variable that will notify the parent whether the child exited successfully of not. If the parent terminates, however, all its children have assigned a new parent, the init process. Thus, the children still have a parent to collect their status and execution statistics. Exit system callVia the exit() system call, A process terminates its execution and asks

the operating system to delete all the resources that it was using. Such

as: Virtual Memory, Open Files and Input Output Buffers. However, not all

resources are released. In particular, the Process Control Block hangs

around to store the process's exit status, until the parent calls

Note that a parent needs to know the identities of its children in order to terminate their processes. In addition to above, some older systems do not allow a child to exist if its parent has terminated. In such systems, if a process terminates (either successful or unsuccessful), then all its children must also be terminated. This phenomenon is called Cascading termination, which is initiated by the operating system. |